Set-Associative Cache in C#, Part 2: Interface Design

This is part 2 of a three-part series on implementing a set-associative cache in C#. In part 1, we looked at how set-associative caches work and sketched out the basic design. In this part, we’ll expand on the design a bit more and define a code interface for the cache. In part 3, we’ll turn the design into working code.

Interface

Now that we have the overall design, it’s time to consider what interface to provide for users of the cache. Let’s consider the methods and properties that a cache type might provide.

-

Methods

- Store items in the cache

- Retrieve items from the cache

- Query for the presence of an item by key

- Remove items from the cache

-

Properties

- Count of items in the cache

- Capacity of the cache

- Number of sets in the cache

- Number of items (ways) in each set

The cache should also provide the means to enumerate over cache items, as well as enumerate keys and value independently. In other words, it should function like an standard .NET generic collection. That means we should implement the interfaces that will allow the cache to work with several extension methods as well as standard enumeration patterns like foreach. Generic collections in the .NET library implement the ICollection<T> interface, and that interface is derived from IEnumerable<T> and IEnumerable. If we derive our cache interface from ICollection<T>, we’ll get a few of the methods and properties that we need, and we’ll be prepared to interoperate with existing .NET code that uses the ICollection, IEnumerable<T>, and IEnumerable interfaces.

Following is the ICollection<T> interface and the two interfaces it derives from, IEnumerable<T> and IEnumerable:

namespace System.Collections {

// Exposes an enumerator, which supports a simple iteration over a non-generic collection.

public interface IEnumerable {

// Returns an enumerator that iterates through a collection.

IEnumerator GetEnumerator();

}

}

namespace System.Collections.Generic {

// Exposes the enumerator, which supports a simple iteration over a collection of a specified type.

public interface IEnumerable<out T> : IEnumerable {

// Returns an enumerator that iterates through the collection.

new IEnumerator<T> GetEnumerator();

}

// Defines methods to manipulate generic collections.

public interface ICollection<T> : IEnumerable<T>, IEnumerable {

// Gets the number of elements contained in the collection.

int Count {

get;

}

// Gets a value indicating whether the collection is read-only.

bool IsReadOnly {

get;

}

// Adds an item to the collection.

void Add(T item);

// Removes all items from the collection.

void Clear();

// Determines whether the collection contains a specific value.

bool Contains(T item);

// Copies the elements of the collection to an array, starting at a particular array index.

void CopyTo(T[] array, int arrayIndex);

// Removes the first occurrence of a specific object from the System.Collections.Generic.ICollection`1.

bool Remove(T item);

}

}We could derive our cache interface directly from ICollection<T>, since it provides several of the methods and properties in the list above. That’s definitely something we want for interoperability, but the problem for day-to-day users is that the methods accept a single generic object type, and our cache is designed to function as a dictionary, which uses keys to store and retrieve values. In part 1, we considered using a KeyValuePair<TKey,TValue> type to store the cache data. Let’s look at how adding a cache item would work in practice using the ICollection<T> interface:

// We have to create a new KeyValuePair for every method call.

coupleCache.Add(new KeyValuePair("Brad", "Angelina"));

// Maybe we could minimize the constructor...

coupleCache.Add(new("Brad", "Angelina"));It works, but it’s clunky. As for Contains and Remove, those are effectively useless because we would either have to know the value of the cache item in order to use those methods (which defeats the entire purpose of the cache), or we would have to specify that those methods would ignore the value. Neither approach is workable.

In part 1, we noted that the cache works a lot like the .NET generic Dictionary type. That collection implements the IDictionary<TKey,TValue> interface:

namespace System.Collections.Generic {

// Represents a generic collection of key/value pairs.

public interface IDictionary<TKey, TValue> : ICollection<KeyValuePair<TKey, TValue>>,

IEnumerable<KeyValuePair<TKey, TValue>>,

IEnumerable

{

// Gets or sets the element with the specified key.

TValue this[TKey key] {

get;

set;

}

// Gets an ICollection containing the keys of the IDictionary.

ICollection<TKey> Keys {

get;

}

// Gets an ICollection containing the values in the IDictionary.

ICollection<TValue> Values {

get;

}

// Adds an element with the provided key and value to the IDictionary.

void Add(TKey key, TValue value);

// Determines whether the IDictionary contains an element with the specified key.

bool ContainsKey(TKey key);

// Removes the element with the specified key from the System.Collections.Generic.IDictionary`2.

bool Remove(TKey key);

// Gets the value associated with the specified key.

bool TryGetValue(TKey key, [MaybeNullWhen(false)] out TValue value);

}

}That looks like exactly what we need. It gives us a method to add an item with the key and value in separate parameters, a method to check for the presence of an item by key, a method to remove an item by key, and a method to retrieve an item’s value by key.

There are two other advantages to using this interface as the basis of our cache. First, it’s a standard interface that would be familiar to experienced .NET developers. Second, it would interoperate with standard .NET libraries, idioms, and extension methods. On the strength of these advantages, we’ll derive our cache interface from IDictionary<TKey, TValue> (and, by extension, from ICollection<T>, IEnumerable<T>, and IEnumerable as well).

The remaining properties that aren’t provided by IDictionary<TKey, TValue> are the capacity, the number of sets, and the number of ways. We’ll add these to our cache interface.

There is also one other method that might be useful. Remember that when we add a new cache item, it might evict an existing cache item. Most of the time it won’t matter what item gets evicted, but there may be scenarios in which we would want to know which item would be evicted if a new cache item were added. To that end, we’ll add a method to support such a scenario and call it TryGetEvictKey, following the pattern of TryGetValue.

Now we can finally define our cache interface, ISetAssociativeCache<TKey, TValue>.

using System.Collections.Generic;

namespace ParksComputing.SetAssociativeCache {

// Represents a generic set-associative cache of key/value pairs.

public interface ISetAssociativeCache<TKey, TValue> : IDictionary<TKey, TValue> {

// Gets the capacity of the cache.

int Capacity { get; }

// Gets the number of sets in the cache.

int Sets { get; }

// Gets the capacity in each set.

int Ways { get; }

// If the given key would cause an existing cache item to be evicted, returns true and sets

// evictKey to the key of the cache item that would be evicted if the new key were added.

bool TryGetEvictKey(TKey key, out TKey evictKey);

}

}This interface will be implemented by concrete classes which provide the behavior of the set-associative cache.

Implementing Cache-Eviction Policies

We discussed in part 1 how cache items are evicted when a new cache item is added to a set which is already full, and there are several policies that can be implemented, depending on the specific use case for the cache. So far we have not addressed how those policies will be provided or implemented.

One method that I played with early on was inspired by Andrei Alexandrescu’s C++ policy-based design as described in Modern C++ Design. It involved supplying a policy class as a generic parameter to the concrete class that implemented ISetAssociativeCache<TKey, TValue>. In practice, instantiating a cache instance would look like the following:

ISetAssociativeCache<string, string> = new SetAssociativeCache<string, string, LruPolicy>(4, 2);Implementing it was a fun exercise, but after playing with it a while I realized that it didn’t really gain me anything. There was no practical difference between the code above and the following code, in terms of what clients had to write:

ISetAssociativeCache<string, string> = new LruCache<string, string>(4, 2);In other words, it made more sense to just put the policy into a concrete class that implemented the cache interface.

The only other variation I could come up with was the scenario of changing the policy at runtime:

ICachePolicy lruPolicy = new LruCachePolicy();

ISetAssociativeCache<string, string> cache = new FlexCache<string, string>(4, 2, LruCachePolicy);

// some time later, for some reason...

cache.Policy = new LfuCachePolicy();I couldn’t come up with a specific reason to do this, but I could at least imagine that it could happen in practice. Even so, that is an implementation detail can be left to specific implementations, so the ISetAssociativeCache<TKey, TValue> interface already provides enough functionality for most mainstream use cases.

The three policies that I’ve implemented so far are least-recently used (LRU), most-recently used (MRU), and least-frequently used (LFU). All three of them ultimately derive from a common class that implements nearly all of the cache code.

There are some key differences in behavior between policies based on recency of use (LRU, MRU) and policies based on frequency of use. The xRU policies based on how recently a cache item was stored, updated, or accessed can be managed by sorting the keys in a set so that the most recently stored, updated, or accessed cache item is moved to the lowest index in the set, pushing the least-recently used item to the highest index. No other metadata needs to be stored. For other policies, such as ones based on how frequently a cache item is used, some data to track the implementation of the policy must be provided.

In order to keep as much common code as possible in a single class, let’s re-evaluate how the key array is stored. In part 1 we considered this layout:

int[] keyArray;I hinted at the time that we would change this definition, and this is why. We need to provide another bit of data alongside the index into the value array. Let’s change the definition to the following:

KeyValuePair<int, int>[] keyArray;Even though LRU and MRU policies don’t use the second int, most other policies will make use of it. LFU will increment it each time a cache item is accessed. Policies based on a cache item’s age might use the second int to store a date/time stamp.

The Class Structure

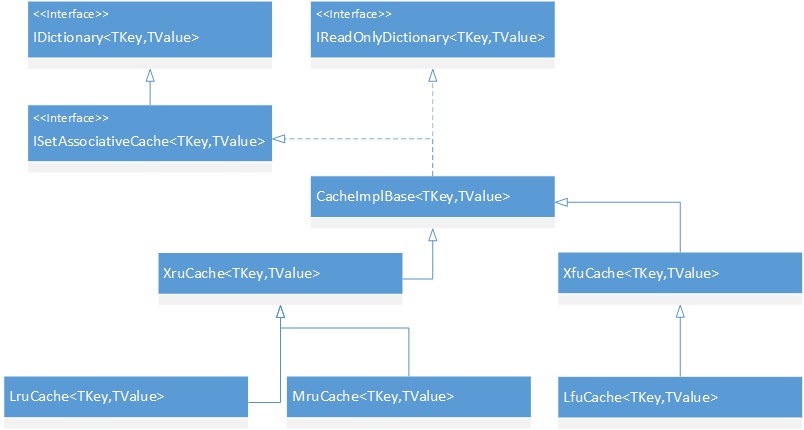

Given that xRU policies and xFU policies diverge on how they track aging of cache items, but otherwise share the vast majority of the rest of their code, we can consider a class structure like the following:

The only class here that we haven’t discussed is IReadOnlyDictionary<TKey, TValue>. This allows clients to share the cache in a read-only manner, such that clients may access the data in the cache without being able to modify it.

Coming Up

In the next article, we’ll finally implement the class structure above and try the cache in some sample code.